Machine Learning (ML), Artificial Intelligence (AI), and Generative Artificial Intelligence (GenAI) are transformative technologies that have the potential to revolutionize industries across the globe.

At the last AWS re:Invent, there were numerous updates related to ML/AI and everything associated with these technologies. I also decided to delve into these topics and immerse myself in this field.

I won't go into what ML, AI, DL (Deep Learning), and GenAI mean. However, I'd like to touch on FMs and LLMs, since that is where we will focus our attention. I found myself asking the same question whenever I came across these terms in my reading or listening. :)

Foundation Models (FMs) are large models pre-trained on broad data that can be adapted to many different AI applications. Within the AWS ecosystem these models, often created by industry-leading AI companies, underpin services such as Amazon Bedrock. Large Language Models (LLMs) are foundation models that specialize in text, and in Amazon Bedrock they play a pivotal role: they provide the service's language understanding and content generation capabilities.

AWS provides various services for Machine Learning and Artificial Intelligence, including Amazon SageMaker, AWS DeepLens, AWS DeepComposer, Amazon Forecast and more. Familiarize yourself with the services available to determine which ones suit your specific needs.

Generative Artificial Intelligence (GenAI) is a type of artificial intelligence that can generate text, images, or other media using generative models. AWS offers a range of services for building and scaling generative AI applications, including Amazon Bedrock, Amazon SageMaker, and Amazon Q. AWS has also invested in developing its own foundation models (FMs), such as the Amazon Titan family; FMs are the very large machine learning models that generative AI relies on. In addition, AWS has launched the Generative AI Innovation Center, which connects AWS AI and ML experts with customers around the world to help them envision, design, and launch new generative AI products and services. Generative AI has the potential to revolutionize the way we create and consume media, but it is important to use it responsibly and ethically.

Some examples of GenAI: one of the most well-known is ChatGPT, launched by OpenAI, which became wildly popular overnight and galvanized public attention. Another model from OpenAI, text-embedding-ada-002, is designed to produce embeddings: numeric vector representations of text that are typically stored in a vector database and used to find relevant data to feed into large language models (LLMs). However, it's important to note that generative AI creates artifacts that can be inaccurate or biased, making human validation essential and potentially limiting the time it saves workers. Therefore, end users should be realistic about the value they are looking to achieve, especially when using a service as is.
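
To make the idea of embeddings a bit more concrete, here is a minimal sketch of how they are typically compared. The vectors below are made-up four-dimensional toy values (an assumption for illustration only; a real embedding model returns far longer vectors), and `most_similar` is a hypothetical helper, not part of any SDK.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Hypothetical toy "embeddings"; a real model would compute these from the text.
TOY_EMBEDDINGS = {
    "How do I reset my password?": [0.9, 0.1, 0.0, 0.2],
    "Steps to change a forgotten password": [0.8, 0.2, 0.1, 0.3],
    "Best hiking trails near Seattle": [0.0, 0.9, 0.8, 0.1],
}


def most_similar(query, embeddings):
    """Return the other text whose vector is closest to the query's vector."""
    query_vec = embeddings[query]
    scored = [
        (cosine_similarity(query_vec, vec), text)
        for text, vec in embeddings.items()
        if text != query
    ]
    return max(scored)[1]


print(most_similar("How do I reset my password?", TOY_EMBEDDINGS))
```

Texts with similar meaning end up with vectors that point in similar directions, which is what lets an application look up relevant passages before handing them to an LLM.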

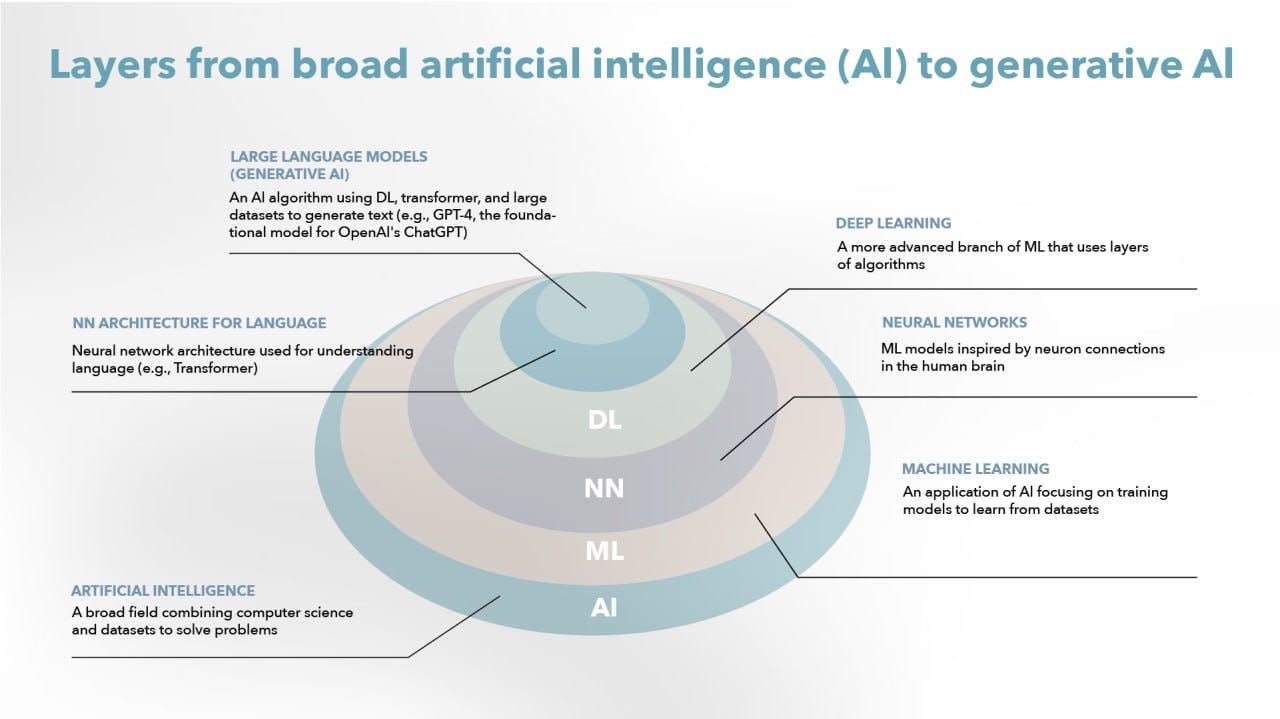

While learning about GenAI, I also dug a bit deeper into Broad AI, and I'd like to show it with the picture above, as it explains a lot.

Broad AI includes task-specific algorithms, Machine Learning (ML), and Deep Learning. These layers enable AI to perform tasks like image recognition, natural language processing, and complex pattern modeling.

The transition to GenAI involves Transfer Learning, Reinforcement Learning, and Autonomous Learning. These layers allow AI to apply knowledge across contexts, learn from interactions, and independently gather and learn from information.

So, the journey from Broad AI to GenAI represents significant leaps in AI capabilities, moving towards AI systems that can truly understand, learn, and adapt like a human brain.

Let's explore a couple of AWS services that, from my perspective, are among the more popular today.

Amazon SageMaker is a comprehensive platform that simplifies the machine learning workflow. It covers everything from data labeling and preparation to model training and deployment. Take advantage of SageMaker's Jupyter notebook integration for interactive data exploration and model development. The platform also supports popular ML frameworks like TensorFlow and PyTorch.

Amazon Q is a generative AI assistant built with a focus on security and privacy. Its purpose is to make the capabilities of generative AI available to employees in organizations of any size and across industries.

AWS also introduced robust enhancements to its generative AI service, Amazon Bedrock.

Amazon Bedrock is a fully managed AWS service that provides access to large language models and other foundation models (FMs) from Amazon and from prominent artificial intelligence (AI) companies such as AI21 Labs, Anthropic, Cohere, Meta, and Stability AI, all through a unified API.
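
As a rough illustration of what calling a model through Bedrock can look like, here is a minimal sketch using the `invoke_model` operation of the boto3 `bedrock-runtime` client. The helper names `build_titan_request` and `generate_text` are my own, the request body follows the shape used by Amazon Titan Text models, and the model ID and region are assumptions you would replace with whatever is enabled in your account.

```python
import json

# Assumed model ID (Amazon Titan Text Express); check which models your account can access.
DEFAULT_MODEL_ID = "amazon.titan-text-express-v1"


def build_titan_request(prompt, max_tokens=256, temperature=0.5):
    """Build the JSON request body in the shape Titan Text models expect."""
    return json.dumps(
        {
            "inputText": prompt,
            "textGenerationConfig": {
                "maxTokenCount": max_tokens,
                "temperature": temperature,
            },
        }
    )


def generate_text(client, prompt, model_id=DEFAULT_MODEL_ID):
    """Send a prompt through a bedrock-runtime client and return the generated text."""
    response = client.invoke_model(
        modelId=model_id,
        contentType="application/json",
        accept="application/json",
        body=build_titan_request(prompt),
    )
    # The response body is a stream containing JSON.
    payload = json.loads(response["body"].read())
    return payload["results"][0]["outputText"]


# With AWS credentials and model access configured, usage would look like:
#   import boto3
#   client = boto3.client("bedrock-runtime", region_name="us-east-1")
#   print(generate_text(client, "Explain foundation models in one sentence."))
```

The operation is the same for every provider, but the JSON body and the response layout differ from model to model, so a helper like `build_titan_request` would need a sibling for, say, Anthropic or Cohere models.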

I would also like to share more about the Amazon Bedrock innovations that were announced at the latest AWS re:Invent.

Fine-tuning for Amazon Bedrock:

Amazon Bedrock now offers more options for model customization, with fine-tuning support for Cohere Command Lite, Meta Llama 2, and Amazon Titan Text models, and support for Anthropic Claude expected soon.
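
To give a feel for what a fine-tuning job involves, here is a hedged sketch that prepares training data and the arguments for the boto3 `create_model_customization_job` operation on the `bedrock` client. The helper names are hypothetical, the prompt/completion JSON Lines layout is the one used for text models, and every concrete value in the usage comment (ARNs, bucket names, base model ID, hyperparameter names) is a placeholder or an assumption to check against the documentation for the model you pick.

```python
import json


def to_jsonl(examples):
    """Serialize (prompt, completion) pairs as JSON Lines, one record per line."""
    return "\n".join(
        json.dumps({"prompt": prompt, "completion": completion})
        for prompt, completion in examples
    )


def build_customization_job(job_name, model_name, role_arn, base_model_id,
                            training_s3_uri, output_s3_uri, hyper_parameters):
    """Collect the keyword arguments for bedrock.create_model_customization_job."""
    return {
        "jobName": job_name,
        "customModelName": model_name,
        "roleArn": role_arn,
        "baseModelIdentifier": base_model_id,
        "trainingDataConfig": {"s3Uri": training_s3_uri},
        "outputDataConfig": {"s3Uri": output_s3_uri},
        # Hyperparameter values are passed as strings.
        "hyperParameters": {key: str(value) for key, value in hyper_parameters.items()},
    }


# Usage sketch (all values are placeholders; hyperparameter names vary by base model):
#   import boto3
#   job = build_customization_job(
#       "my-tuning-job", "my-custom-model",
#       "arn:aws:iam::123456789012:role/MyBedrockRole",
#       "amazon.titan-text-express-v1",
#       "s3://my-bucket/train.jsonl", "s3://my-bucket/output/",
#       {"epochCount": 2, "batchSize": 1, "learningRate": 0.00001},
#   )
#   boto3.client("bedrock").create_model_customization_job(**job)
```

The training file produced by `to_jsonl` would be uploaded to the S3 location referenced in `trainingDataConfig` before the job is started.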

These recent enhancements to Amazon Bedrock significantly reshape how organizations, regardless of their size or industry, can leverage generative AI to drive innovation and redefine customer experiences.

AWS supports all the leading deep-learning frameworks and makes them straightforward to deploy. The AWS Deep Learning AMI (Amazon Machine Image), available for both Amazon Linux and Ubuntu, lets you create managed, auto-scalable GPU clusters, so training and inference can run at any scale. AWS also offers a range of AI services that let you integrate pre-trained models into your applications without deep expertise in machine learning. Services like Amazon Rekognition for image and video analysis, Amazon Comprehend for natural language processing, and Amazon Polly for text-to-speech can enhance your applications with AI capabilities.
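
As an example of how little code a pre-trained AI service can need, here is a minimal sketch around Amazon Comprehend's `detect_sentiment` operation. The wrapper function is my own naming, and the region in the usage comment is an assumption; the client is passed in so the function can also be exercised without AWS access.

```python
def detect_sentiment(client, text, language_code="en"):
    """Return (label, confidence) for a piece of text using Amazon Comprehend."""
    response = client.detect_sentiment(Text=text, LanguageCode=language_code)
    label = response["Sentiment"]  # POSITIVE, NEGATIVE, NEUTRAL, or MIXED
    # SentimentScore keys are capitalized: Positive, Negative, Neutral, Mixed.
    confidence = response["SentimentScore"][label.capitalize()]
    return label, confidence


# With AWS credentials configured, usage would look like:
#   import boto3
#   comprehend = boto3.client("comprehend", region_name="us-east-1")
#   print(detect_sentiment(comprehend, "I really enjoyed re:Invent this year!"))
```

Amazon Rekognition and Amazon Polly follow the same pattern: create a client, call one operation, and read the result from the returned dictionary.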

The best way to solidify your understanding of ML, AI, and GenAI on AWS is through hands-on projects. Start with simple projects and gradually increase complexity as you gain confidence. Use datasets available on platforms like Kaggle or create your own to train and test models.

Conclusion:

Embarking on a journey into Machine Learning, Artificial Intelligence, and Generative Artificial Intelligence on AWS is an exciting endeavor. By getting to know the services described here and working through hands-on projects, you can lay a solid foundation for your understanding and proficiency in leveraging AWS services for ML and AI applications. Remember, the key to success is a combination of hands-on experience, continuous learning, and active engagement with the AWS community. Happy training!